
The Human Edge of AI: Why Healthcare's AI Future Depends on Human Judgement

As we enter 2026, there can be no doubt that AI is transforming healthcare and MedTech at an incredible pace. From accelerating drug development to revolutionizing medical device innovation, AI promises capabilities that seemed impossible just a few years ago. Amid the technological enthusiasm, however, a critical truth has emerged: the most successful AI implementations in healthcare aren't replacing human expertise; they are amplifying it.

For those working in Quality Assurance and Regulatory Affairs (QARA), the question is no longer if AI should be adopted. It’s how to implement it responsibly while retaining the human judgement, clinical expertise and institutional knowledge that keep patients safe.

The partnership model: Machines plus humans, not machines instead of humans

The most fundamental insight from healthcare AI deployment is this: AI serves best when it amplifies human expertise, not when it replaces it. Successful organizations across pharmaceutical manufacturing, medical device innovation and post-market surveillance (PMS) embrace hybrid intelligence, in which machine precision and human judgement combine to create capabilities neither could achieve alone.

Consider PMS as an example. Traditional systems operate retrospectively: events occur, reports are filed, analysis takes place and responses follow, often months after the fact. AI transforms this process by enabling proactive, real-time monitoring in which statistical anomalies and emerging patterns are detected as they arise rather than discovered during periodic reviews.

Yet the human role remains irreplaceable. AI can flag potential signals, but it is humans who provide the contextual interpretation, ethical reasoning and regulatory judgement needed to determine the appropriate response.

In short: the machine provides signals; humans provide judgement.
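To make this split concrete, here is a minimal Python sketch; it is an illustration only and does not describe any IQVIA product. It assumes weekly adverse-event counts for a single device and uses a simple trailing z-score as a stand-in for whatever statistical method a real PMS system would apply. The names `detect_signals`, `record_decision` and the `ESCALATE`/`MONITOR`/`DISMISS` dispositions are hypothetical. The point is the workflow: the detector can only open a signal in a pending state, and only a named reviewer with a documented rationale can close it.

```python
from dataclasses import dataclass
from statistics import mean, stdev
from typing import List, Optional

# Hypothetical dispositions that only a human reviewer may assign.
DISPOSITIONS = {"ESCALATE", "MONITOR", "DISMISS"}


@dataclass
class Signal:
    week: int
    count: int
    z_score: float
    status: str = "PENDING_HUMAN_REVIEW"  # the detector never sets a final disposition
    reviewer: Optional[str] = None
    rationale: Optional[str] = None


def detect_signals(weekly_counts: List[int], baseline_weeks: int = 8,
                   threshold: float = 3.0) -> List[Signal]:
    """Flag weeks whose adverse-event count rises sharply above a trailing baseline."""
    signals = []
    for week in range(baseline_weeks, len(weekly_counts)):
        baseline = weekly_counts[week - baseline_weeks:week]
        mu = mean(baseline)
        # Assumed floor of 1.0 so a perfectly flat baseline does not divide by zero.
        sigma = stdev(baseline) or 1.0
        z = (weekly_counts[week] - mu) / sigma
        if z >= threshold:  # one-sided: only increases in reports are flagged here
            signals.append(Signal(week=week, count=weekly_counts[week], z_score=round(z, 2)))
    return signals


def record_decision(signal: Signal, reviewer: str, decision: str, rationale: str) -> Signal:
    """Close a signal; requires a named reviewer and a documented rationale."""
    if decision not in DISPOSITIONS:
        raise ValueError(f"Unknown disposition: {decision}")
    if not reviewer.strip() or not rationale.strip():
        raise ValueError("A named reviewer and a documented rationale are required")
    signal.status = decision
    signal.reviewer = reviewer
    signal.rationale = rationale
    return signal


if __name__ == "__main__":
    counts = [4, 5, 3, 4, 6, 5, 4, 5, 19, 5]  # illustrative data, not real reports
    for s in detect_signals(counts):
        print(s.week, s.count, s.z_score, s.status)
```

With the illustrative counts above, only the spike in week 8 is flagged, and it stays in `PENDING_HUMAN_REVIEW` until someone calls `record_decision`. Requiring a rationale, rather than just a yes/no, is a design choice that keeps the human judgement auditable.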

Why regulators demand human oversight

Human-in-the-loop isn't just best practice; it's increasingly a regulatory requirement. The EU AI Act, US FDA guidance and emerging frameworks worldwide require meaningful human oversight of healthcare AI systems. This reflects a recognition that healthcare decisions are too consequential to delegate to algorithms, even highly accurate ones. Three capabilities distinguish human judgement from machine intelligence in healthcare:

- Clinical expertise for contextual interpretation, including patient-specific factors, comorbidities, and real-world conditions that may not have been represented in the training data.

- Ethical reasoning to navigate moral complexity, balance competing values, and make decisions that honor evidence and human dignity.

- Nuanced regulatory interpretation to understand dynamic global frameworks, apply principles to novel situations and judge compliance in ambiguous circumstances.

These capabilities cannot be automated; they are the irreplaceable human edge.

Governance as competitive advantage, not compliance burden

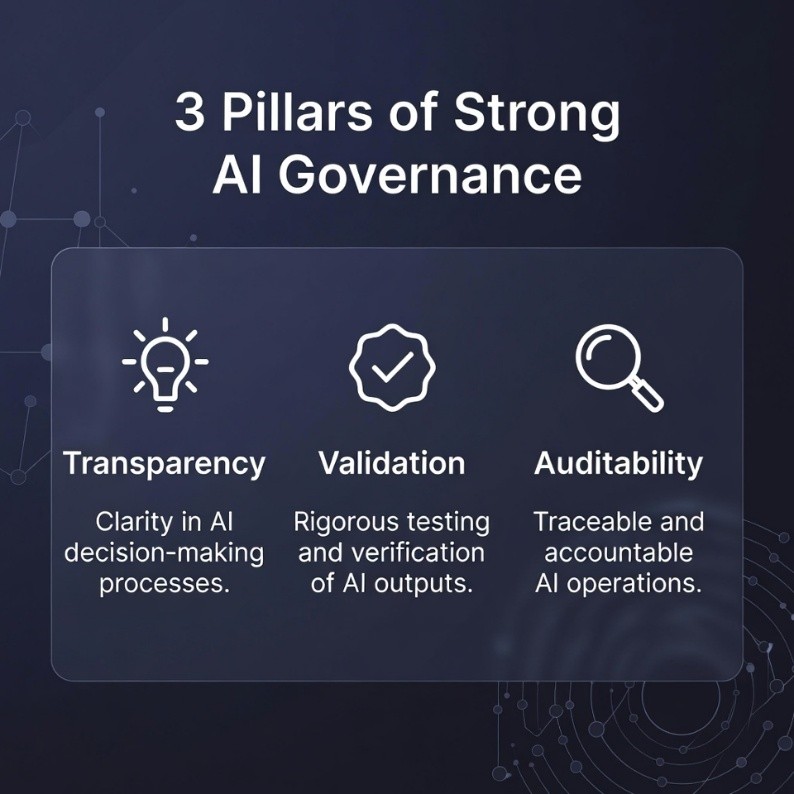

Organizations often see governance as regulatory overhead. In fact, the opposite is true: governance is strategic infrastructure that enables innovation, protects patients and assures compliance. Organizations with mature governance frameworks realize a range of concrete benefits, including faster regulatory approvals, greater clinician confidence, reduced regulatory friction and higher process quality. Three pillars define strong governance:


The competency cliff: How to protect institutional knowledge

With AI automating routine tasks, organizations face a strategic paradox: the very automation that drives efficiency also eliminates the routine work through which junior professionals have traditionally learned. Meanwhile, experienced professionals who hold critical institutional knowledge are approaching retirement. The result is what has been termed a "competency cliff": an abrupt loss of expertise as automation erases developmental pathways at the same time that veterans exit.

The risk is real. Junior QARA professionals who never performed manual reviews of manufacturing deviations may lack the judgement to recognize when an AI flag needs escalation. Regulatory specialists who never authored submission content from scratch may miss key nuances in AI-generated materials, and clinical reviewers who never personally evaluated adverse event reports may not apply healthy skepticism to AI assessments.

Strategic responses include structured knowledge-transfer programs that capture institutional wisdom before it walks out the door; redesigned development paths that ensure junior professionals encounter complex scenarios AI cannot handle; and evolved veteran roles that shift experienced professionals toward exception handling, coaching and complex problem-solving.

Put simply: it is easier to teach AI applications to a domain expert than to teach decades of contextualized healthcare expertise to an AI engineer.

AI literacy as a legal requirement

The EU AI Act has turned AI literacy from a competitive advantage into a compliance obligation. Any organization that deploys high-risk AI systems must demonstrate that its workforce is competent to understand, use and oversee these technologies. Effective literacy programs address three dimensions:

- Usability ensures that employees can interpret system outputs, recognize when results warrant skepticism, execute oversight procedures and escalate concerns through the proper channels.

- Trustability helps users detect potential bias, appreciate the limits of any validation, question anomalous results and judge when AI confidence levels should guide decision-making.

- Regulatory understanding covers regulatory requirements, documentation expectations, change management procedures and individual accountability within governance frameworks.

The strategic opportunity: upskilling experienced professionals creates hybrid expertise that is potentially more valuable than hiring AI specialists without healthcare domain knowledge. A quality professional who understands both AI capabilities and pharmaceutical manufacturing brings a perspective no pure technologist can match.

The ethical imperative: Patient benefit as ROI

Ethics in healthcare AI revolves around beneficence: systems must demonstrably improve patient care, not merely avoid harm. This raises the bar beyond traditional software validation. Conventional systems succeed by functioning as specified without causing injury, whereas healthcare AI must actively improve outcomes, safety or experience to justify deployment.

Every AI application has to answer one question: does this improve patient outcomes? That question forces discipline in resource allocation, ensuring investment flows to applications with real clinical impact rather than to impressive technology devoid of tangible medical value.

Conventional ROI metrics (cost savings, efficiency gains, productivity increases) fall short in capturing the value of healthcare AI. A patient-centered approach to ROI encompasses improved clinical outcomes, enhanced safety through earlier detection of adverse events, better patient experience and greater health equity through narrower gaps in quality of care and access.

This goes beyond cost savings; it is about ensuring that patients receive high-quality care.

From framework to reality: operationalization

Moving from principle to practice requires strategies that address real-world deployment challenges. Organizations realize the greatest success by:

- Starting small, with limited-scope pilots in domains where AI provides clear value without excessive risk, such as document management, surveillance signal detection and quality control. This builds capability before taking on high-stakes deployments.

- Building cross-functional teams of quality and regulatory professionals, clinical experts, data scientists, IT professionals and ethics representatives. This diversity enables holistic evaluation, from technical feasibility through ethical acceptability to regulatory viability.

- Managing expectations through transparency about what AI can and cannot do: providing realistic timelines that account for the validation burden, and discussing risks as openly as benefits, which is essential in a heavily regulated environment.

- Creating feedback mechanisms that capture user input, monitor performance metrics, analyze error patterns and implement improvements through controlled change management. Organizations that treat deployment as a one-time implementation fail; those that build continuous improvement into system design succeed.

Real-world lessons

Abstract governance principles become concrete in real-world applications, where organizations must navigate regulatory constraints, balance competing priorities and build trust through demonstrated governance.

When IQVIA conducts AI demonstrations for QARA professionals, one question invariably comes up within the first ten minutes: "How do you validate a self-learning, continuously evolving algorithm within a quality management system governed by 21 CFR Part 11, ISO 13485 and the EU Medical Device Regulation?"

The question reflects a genuine dilemma. Traditional validation assumes a stable system with deterministic behavior: define requirements, test against specifications, document results and maintain the validated state through change control. Self-learning AI systems fundamentally challenge this assumption, because every patient interaction can potentially modify the algorithm. How do you maintain validation when the system changes continuously?

The pragmatic solution? Turn off self-learning. This sacrifices some AI adaptability but gains critical advantages: compliance through traditional validation pathways, audit trail integrity, predictable performance that enables meaningful testing, and manageable risk through controlled updates. This is not a compromise but a strategic decision that prioritizes deployment viability over theoretical optimality.

Good AI governance sometimes involves deliberately constraining technological capability to match regulatory reality.
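One way to picture "turning off self-learning" is a model whose parameters are frozen at validation time and checked against a recorded fingerprint before use. The Python sketch below is hypothetical: it assumes the model artifact can be serialized to bytes, uses a SHA-256 hash as the validated-state fingerprint, substitutes a toy linear scorer for a real model, and introduces illustrative names (`ValidationRecord`, `LockedModel`, `serialize`). It is not a complete 21 CFR Part 11 or ISO 13485 solution; it only shows that a locked model has no update path and refuses to load if its parameters differ from the approved version.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class ValidationRecord:
    model_version: str
    sha256: str        # fingerprint of the exact artifact that passed validation
    approved_by: str


def fingerprint(artifact: bytes) -> str:
    return hashlib.sha256(artifact).hexdigest()


def serialize(weights: Sequence[float]) -> bytes:
    """Hypothetical helper: deterministic byte encoding of the model parameters."""
    return json.dumps({"weights": list(weights)}, sort_keys=True).encode("utf-8")


class LockedModel:
    """A toy linear scorer whose parameters are frozen at the validated state."""
    # Deliberately no fit() or update() method: self-learning is switched off,
    # so any parameter change must arrive as a new artifact via change control.

    def __init__(self, artifact: bytes, record: ValidationRecord):
        if fingerprint(artifact) != record.sha256:
            raise RuntimeError(
                f"Artifact does not match validated version {record.model_version}; "
                "route the change through change control and revalidate")
        params = json.loads(artifact)
        self._weights = tuple(params["weights"])  # immutable tuple, no in-place learning
        self.record = record

    def predict(self, features: Sequence[float]) -> float:
        if len(features) != len(self._weights):
            raise ValueError("Feature count does not match the validated model")
        return sum(w * x for w, x in zip(self._weights, features))


if __name__ == "__main__":
    artifact = serialize([0.5, 0.25])
    record = ValidationRecord("1.0.0", fingerprint(artifact), "QA approver")
    model = LockedModel(artifact, record)
    print(model.predict([2.0, 4.0]))
```

In this sketch, a retrained model is simply a new artifact with a new fingerprint, so it cannot be loaded against the old `ValidationRecord`; updating it means producing and approving a new record, which mirrors the controlled-update path described above.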

The path ahead

The AI-driven transformation of healthcare is neither optional nor reversible. The question facing leaders is not whether to adopt AI, but how to implement it responsibly while preserving the human expertise, ethical judgement and institutional knowledge that protect patients from harm.

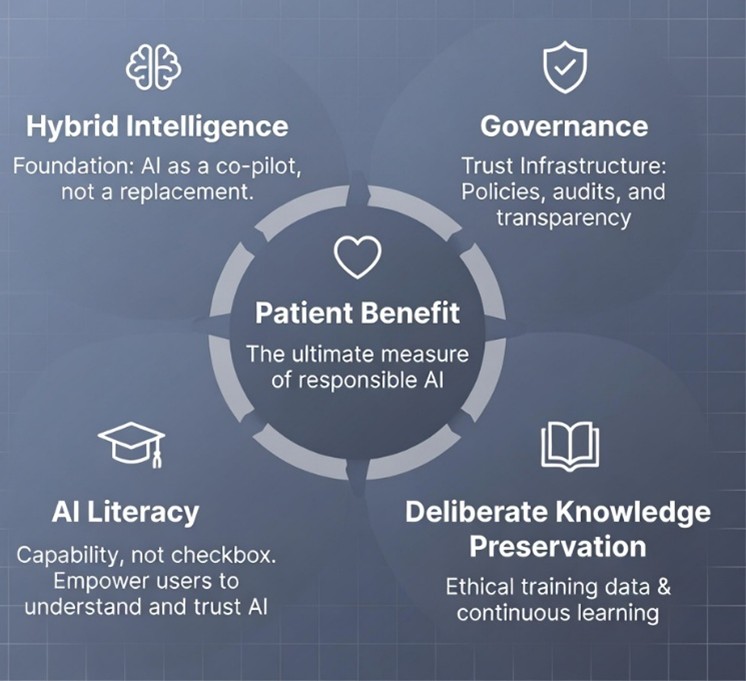

Responsible implementation is guided by five core elements:


The human edge of AI is not a limitation to be overcome; it is the very foundation upon which responsible healthcare innovation must be built. Organizations that adopt this framing don't simply deploy AI safely; they create sustainable competitive advantage grounded in trust, expertise and demonstrated patient benefit.

This is the path forward: integrating machine precision with human judgement so that healthcare AI serves its ultimate purpose, improving patient care while honoring the irreplaceable value of human expertise, ethical reasoning and clinical wisdom.
