Prompt and Proper: How IQVIA is using Declarative LLM Prompting to build Healthcare-grade AI™

The potential of AI in healthcare excites and motivates everything we do in the IQVIA NLP team. IQVIA has worked in this space for several years, and using Artificial Intelligence to ease the burden of healthcare delivery and improve patient lives has been a north star for our organization.

In recent years, Large Language Models (LLMs) have emerged as a transformative force in the field of Artificial Intelligence. These powerful AI models, trained on vast amounts of text data, can understand, generate, and manipulate human language with impressive accuracy and fluency.

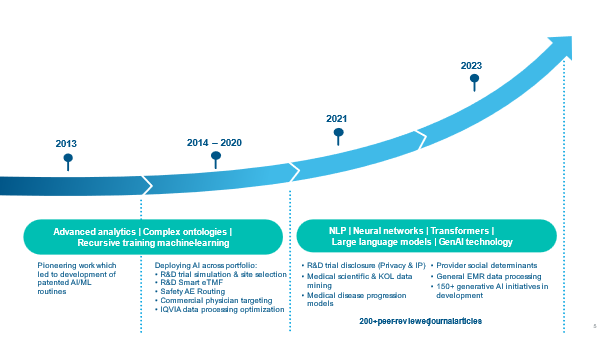

Figure 1: A rich history of developing AI for healthcare: IQVIA’s award-winning AI methodologies validated on 100+ diseases.

Building Healthcare-grade AI using LLMs presents unique technical and governance challenges, not least because healthcare data is inherently complex and sensitive (see the Privacy Analytics article on data sharing risks with ChatGPT). This blog post focuses on some of the key technical barriers to realizing the value of LLMs in this space, specifically accuracy, data complexity, and prompting limitations:

Low accuracy and reliability of generated outputs

- Medical information is nuanced, context-dependent, and subject to frequent updates, so LLMs often generate plausible but incorrect responses or struggle with medical terminology and concepts.

- Inaccuracies in AI outputs could have serious consequences for patient care and decision-making.

Complexity and diversity of medical data

- Healthcare data encompasses structured and unstructured formats, including electronic health records, clinical notes, medical images, and lab results.

- Integrating and pre-processing heterogeneous data to create suitable prompts for LLMs is time-consuming and challenging.

Limitations of traditional prompting methods

- Crafting effective prompts requires significant manual effort and domain expertise, creating a bottleneck in the development process.

- LLM performance can be sensitive to the specific wording and structure of prompts, leading to inconsistencies and variability in generated outputs.

To address these challenges and build reliable Healthcare-grade AI using LLMs, innovative approaches are necessary. In this blog, we will explore how robust declarative prompting frameworks, such as those being developed by IQVIA, are spearheading AI development for healthcare applications by:

- Addressing the limitations of traditional prompting methods

- Enabling the creation of more accurate, efficient, and trustworthy AI solutions

What is LLM Prompting?

At its core, prompting involves providing an LLM with a specific input or context, known as a prompt, to guide its output towards a desired task or outcome. The prompt can take many forms, such as a question, a partial sentence, or a set of instructions, depending on the intended use case. By carefully crafting prompts, developers can steer the LLM's behaviour and generate relevant, coherent, and accurate responses.

Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

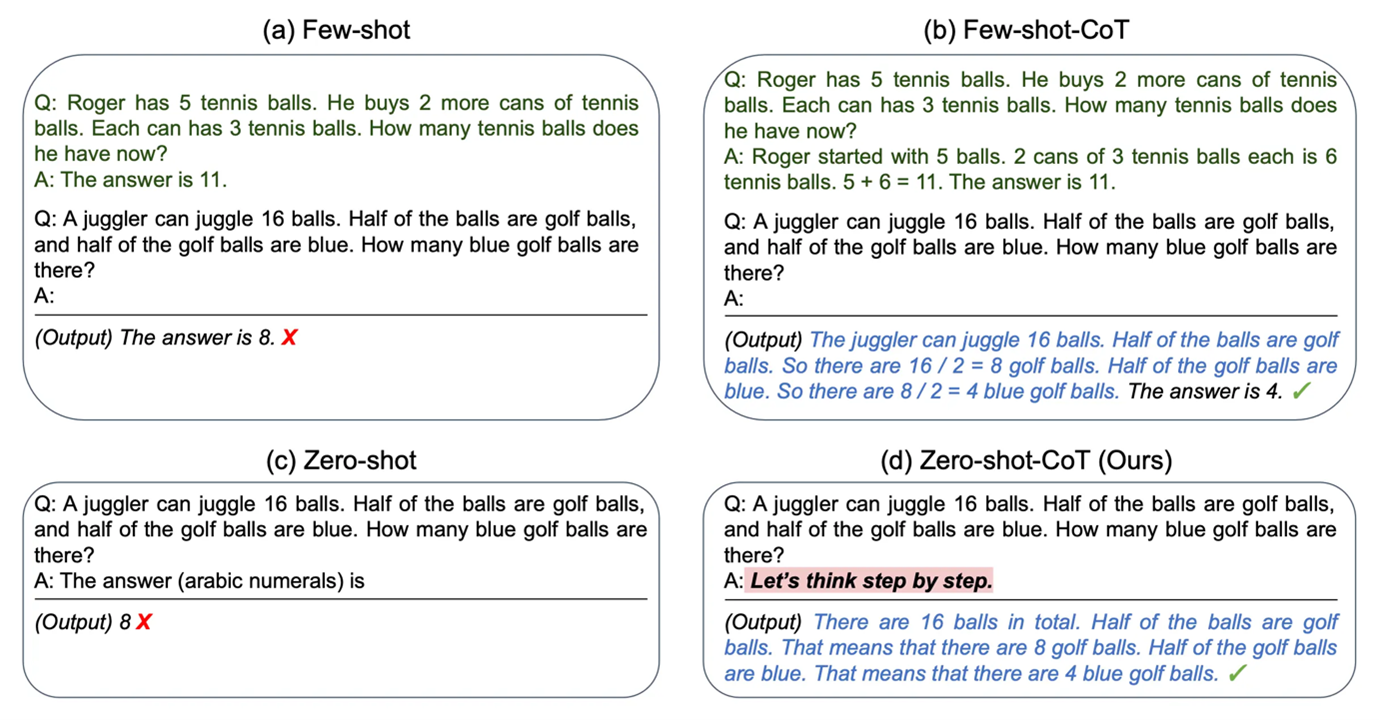

There are several types of prompting techniques employed in LLM-based AI development:

- Zero-Shot Prompting: In this technique, the LLM is given a prompt without any prior examples or training for the specific task. The model relies on its pre-existing knowledge and understanding of language to generate a relevant output.

- Few-Shot Prompting: This approach involves providing the LLM with a small number of examples or demonstrations of the desired task, along with the actual input prompt. The model learns from these examples and generates an appropriate response based on the given context.

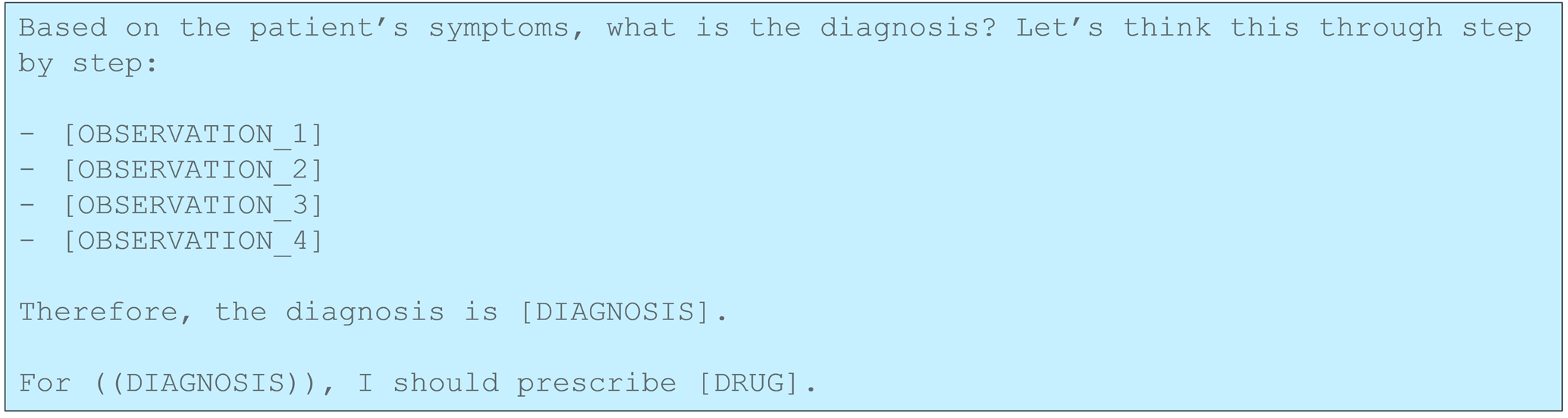

- Chain-of-Thought Prompting: This method encourages the LLM to break down complex problems into intermediate reasoning steps. By providing a prompt that includes a series of sub-questions or thought processes, developers can guide the model to generate more transparent and interpretable responses.
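The three techniques above differ mainly in how the prompt string is assembled. The following is a minimal sketch of that assembly, not any particular library's API; the function names, the "Q:/A:" layout, and the dosing example are illustrative assumptions.

```python
from typing import List, Tuple


def zero_shot(question: str) -> str:
    """Zero-shot: the task input only, with no worked examples."""
    return f"Q: {question}\nA:"


def few_shot(examples: List[Tuple[str, str]], question: str) -> str:
    """Few-shot: prepend (question, answer) demonstrations to the input."""
    demos = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"{demos}\n\nQ: {question}\nA:"


def chain_of_thought(examples: List[Tuple[str, str, str]], question: str) -> str:
    """Chain-of-thought: each demonstration spells out its reasoning before the answer."""
    demos = "\n\n".join(
        f"Q: {q}\nA: {reasoning} The answer is {a}." for q, reasoning, a in examples
    )
    return f"{demos}\n\nQ: {question}\nA:"


# Illustrative (made-up) arithmetic example.
demo_q = "A patient takes one 500 mg tablet twice a day. What is the daily dose in mg?"
demo_reasoning = "Each dose is 500 mg and there are 2 doses per day, so 500 * 2 = 1000."
new_q = "A patient takes two 250 mg tablets three times a day. What is the daily dose in mg?"

zero_shot_prompt = zero_shot(new_q)
few_shot_prompt = few_shot([(demo_q, "1000")], new_q)
cot_prompt = chain_of_thought([(demo_q, demo_reasoning, "1000")], new_q)
```

Each resulting string would be sent to the model as-is; only the chain-of-thought version shows the model how to reason before answering.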

Prompting has become an essential tool in the AI developer's toolkit, enabling them to adapt LLMs to a wide range of applications, from answering questions and generating reports to assisting with decision-making and problem-solving. However, as we will explore further in the next section, more robust LLM prompting methods are required to build Healthcare-grade AI using LLMs.

Declarative Prompting: A Game-Changer for Healthcare AI

The declarative prompting paradigm has the potential to be a game-changer in the development of Healthcare-grade AI using LLMs. This approach to interacting with LLMs offers a more structured, intuitive, and efficient way to craft prompts, enabling developers to create high-quality AI solutions tailored to the unique needs of the healthcare industry.

The key advantages of declarative prompting for Healthcare-grade AI include:

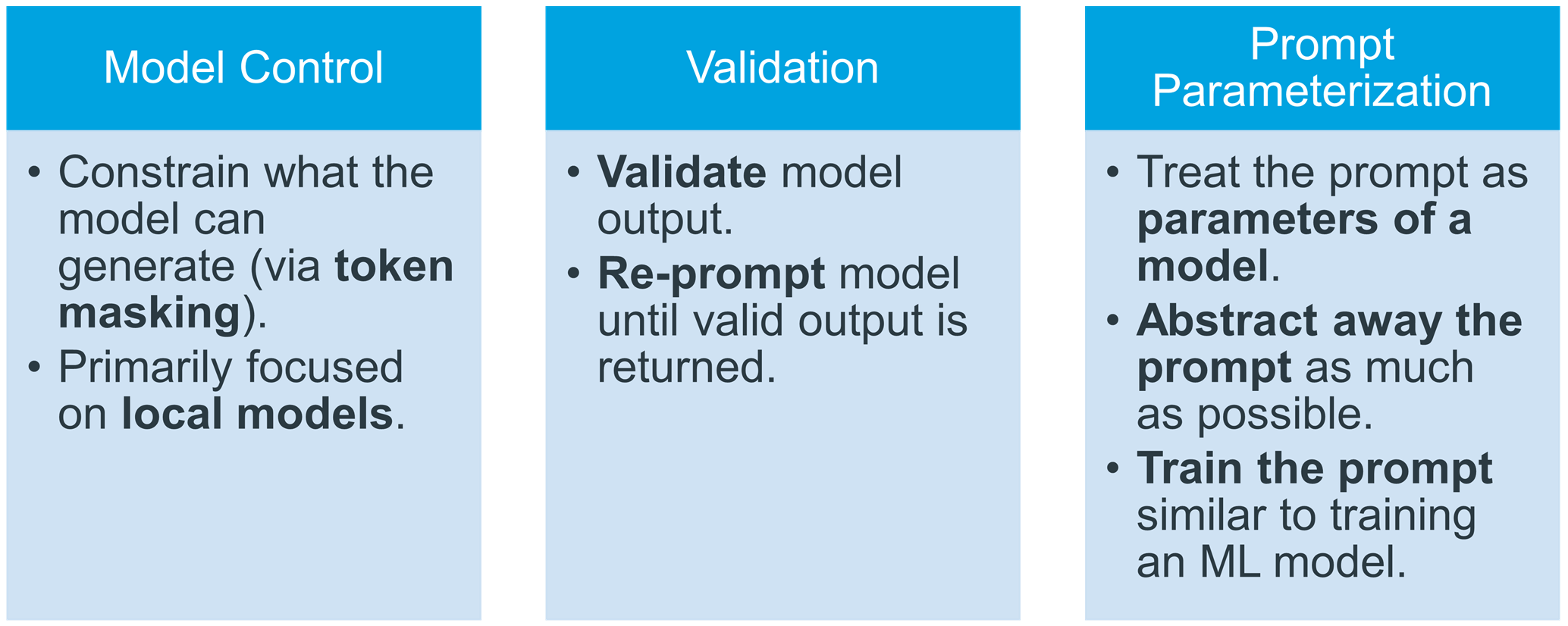

Enhanced model control

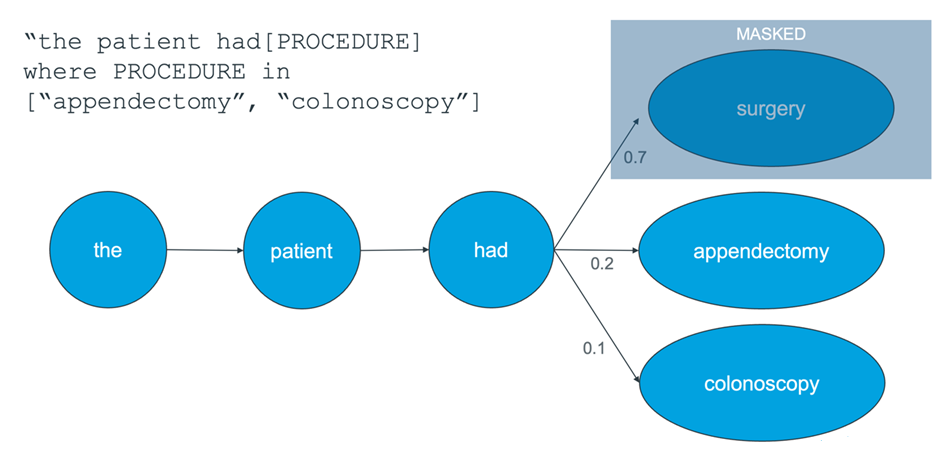

In the context of language modelling, a token is a small unit of text, typically a word or a part of a word, that the language model uses during processing. For example, the word “cat” might be represented as a single token, while “unbelievable” might be broken down into multiple tokens, e.g., “un”, “believ”, and “able”. One control mechanism is token masking, which allows developers to constrain the LLM's output by specifying which tokens or token types are allowed or disallowed. This enables fine-grained control over the generated content, preventing the model from producing irrelevant or inappropriate responses.

Figure 2: Token masking: LLM output is controlled by masking tokens that should not be generated.
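As a rough illustration of token masking, the sketch below works on a toy dictionary of next-token scores rather than a real model. The token names and scores are invented, and `mask_logits` and `greedy_pick` are hypothetical helpers; real libraries apply the same idea to the model's full vocabulary at every decoding step.

```python
import math
from typing import Dict, Set


def mask_logits(logits: Dict[str, float], allowed: Set[str]) -> Dict[str, float]:
    """Set the score of every disallowed token to -inf so it can never be chosen."""
    return {
        tok: (score if tok in allowed else -math.inf)
        for tok, score in logits.items()
    }


def greedy_pick(logits: Dict[str, float]) -> str:
    """Pick the highest-scoring token (greedy decoding)."""
    return max(logits, key=lambda tok: logits[tok])


# Hypothetical next-token scores for a yes/no question.
raw_logits = {"yes": 1.2, "no": 0.9, "maybe": 2.5, "unclear": 0.1}
allowed_tokens = {"yes", "no"}

unconstrained = greedy_pick(raw_logits)                            # "maybe"
constrained = greedy_pick(mask_logits(raw_logits, allowed_tokens))  # "yes"
```

Without the mask the toy model answers "maybe"; with it, the answer is forced into the permitted set.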

Declarative prompting also enables easier interleaving of prompts and generation which allows for a more granular and interactive approach to building Healthcare-grade AI solutions. Instead of relying on a single, monolithic prompt to guide the LLM's output, developers can create a series of smaller, targeted prompts that are dynamically injected into the generation process based on the context and the desired outcome.

Interleaved Prompt and Generation

- Several libraries allow you to interleave prompt and generated text, storing variables and referencing previous generations.

  - Some also do this more efficiently than prompting the model several times.

- In the following example:

  - Variables in square brackets [] are generated text.

  - Variables in double round brackets (()) reference previously generated text.

  - All other text is part of the prompt.

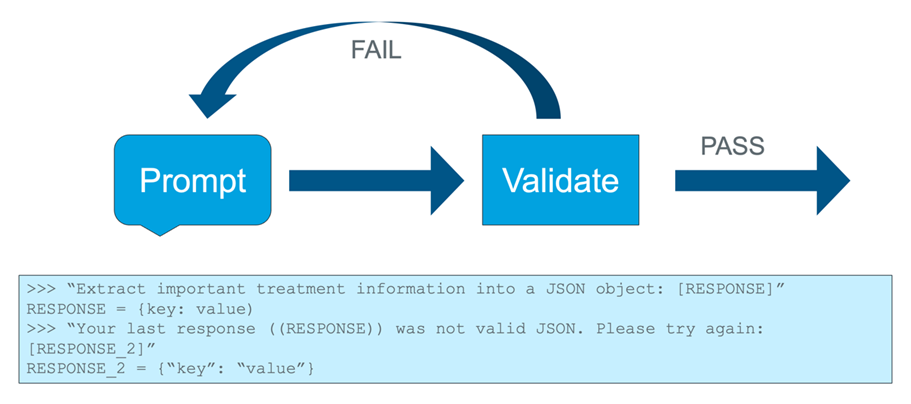
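The bracket convention described above can be mimicked with a small template interpreter. This is a sketch under stated assumptions: `run_template` is a hypothetical helper, `fake_generate` stands in for a real model call, and the template text is invented. It does not reproduce the syntax of any specific library.

```python
import re
from typing import Callable, Dict, Tuple

# [name] marks text to generate; ((name)) references an earlier generation.
PLACEHOLDER = re.compile(r"\[(\w+)\]|\(\((\w+)\)\)")


def run_template(
    template: str, generate: Callable[[str, str], str]
) -> Tuple[str, Dict[str, str]]:
    """Fill the template left to right, calling `generate` at each [name]."""
    variables: Dict[str, str] = {}
    text = ""
    pos = 0
    for match in PLACEHOLDER.finditer(template):
        text += template[pos:match.start()]   # literal prompt text
        gen_name, ref_name = match.group(1), match.group(2)
        if gen_name is not None:
            # The model sees everything produced so far as its prompt.
            value = generate(text, gen_name)
            variables[gen_name] = value
        else:
            value = variables[ref_name]       # KeyError if not generated yet
        text += value
        pos = match.end()
    text += template[pos:]
    return text, variables


def fake_generate(prompt_so_far: str, name: str) -> str:
    """Stand-in for an LLM call; returns canned values for the demo."""
    return {"drug": "metformin", "dose": "500 mg"}[name]


template = "Medication mentioned: [drug]\nDose of ((drug)): [dose]\n"
filled_text, generated = run_template(template, fake_generate)
```

This naive version re-sends the growing prompt at each step; as noted above, some libraries avoid that repeated work.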

Robust validation and re-prompting techniques

Declarative prompting enables advanced validation mechanisms to assess the quality and accuracy of the generated outputs. If the output fails to meet the specified validation criteria, the model can be automatically re-prompted with additional context or constraints. Iterative validation and re-prompting help refine the model's responses and ensure that the final output meets the required standards of Healthcare-grade AI.

Figure 3: If validation fails, re-prompting occurs a designated number of times, usually including the invalid response.

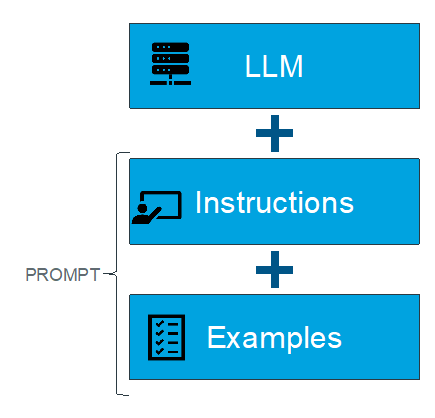
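A validate-and-re-prompt loop of this kind might look like the sketch below. The helper names, the retry wording, and the stubbed model are assumptions for illustration, and the regular expression checks only the general shape of a code, so it is not a real ICD-10 validator.

```python
import re
from typing import Callable, List, Optional


def looks_like_icd10(text: str) -> bool:
    """Simplified shape check: a letter, two digits, optional decimal part."""
    return re.fullmatch(r"[A-Z]\d{2}(\.\d{1,4})?", text.strip()) is not None


def generate_with_retries(
    prompt: str,
    llm: Callable[[str], str],
    is_valid: Callable[[str], bool],
    max_retries: int = 2,
) -> Optional[str]:
    """Call the model, re-prompting with the invalid response until it validates."""
    current_prompt = prompt
    for _ in range(max_retries + 1):
        response = llm(current_prompt)
        if is_valid(response):
            return response
        # Include the invalid response so the model can correct itself.
        current_prompt = (
            f"{prompt}\n\nYour previous answer was invalid: {response!r}\n"
            "Answer again using only the required format."
        )
    return None  # give up after the designated number of attempts


# Stubbed model: wrong format first, valid format on the retry.
prompts_seen: List[str] = []
canned_replies = iter(["diabetes", "E11.9"])


def fake_llm(prompt: str) -> str:
    prompts_seen.append(prompt)
    return next(canned_replies)


result = generate_with_retries(
    "Give the ICD-10 code for type 2 diabetes mellitus without complications.",
    fake_llm,
    looks_like_icd10,
)
```

Returning `None` after the retry budget is spent leaves the caller to decide whether to escalate to a human reviewer or fall back to another method.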

Prompt parameterization and abstraction

With declarative prompting, developers can treat prompts as parameterized inputs to the LLM. Prompt parameters can be abstracted away from the specific prompt text, enabling more flexible and reusable prompt templates.

- This paradigm treats prompts and examples as additional parameters of the model.

- It requires minimal manual engineering of prompts.

- Modules (representing separate prompting steps) are defined much like they are in PyTorch or other machine learning packages.

  - For example, a QA pipeline featuring document retrieval might have the following defined steps:

    - question -> context

    - question, context -> answer

- Instructions and examples can be optimized via training data and customizable metrics.

  - E.g., exact match, partial match, similarity

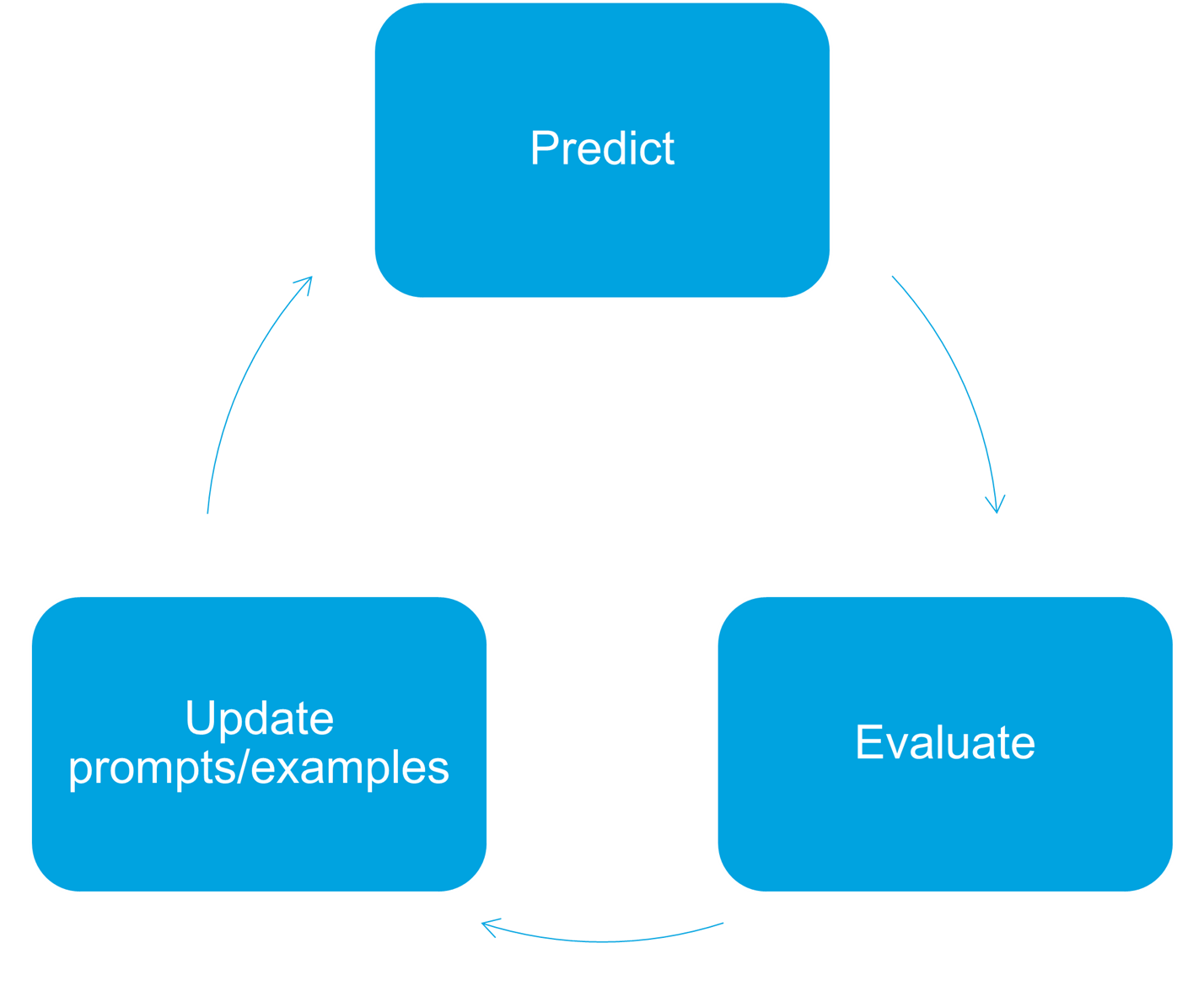
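The two-step QA pipeline above can be sketched in plain Python to show how steps are declared by their inputs and outputs. The `Step` class, the keyword-overlap retriever, the toy documents, and the stubbed answer function are all illustrative assumptions; this is not the API of DSPy or any other library.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Step:
    """One prompting step, declared by a signature such as 'question -> context'."""
    signature: str
    run: Callable[..., str]

    def __post_init__(self) -> None:
        left, right = self.signature.split("->")
        self.inputs = [name.strip() for name in left.split(",")]
        self.output = right.strip()

    def __call__(self, state: Dict[str, str]) -> Dict[str, str]:
        # Pull the declared inputs from the state and store the declared output.
        args = {name: state[name] for name in self.inputs}
        return {**state, self.output: self.run(**args)}


def run_pipeline(steps: List[Step], **inputs: str) -> Dict[str, str]:
    state = dict(inputs)
    for step in steps:
        state = step(state)
    return state


# Toy document store for the retrieval step.
DOCS = [
    "Metformin is a first-line treatment for type 2 diabetes.",
    "Ibuprofen is a nonsteroidal anti-inflammatory drug.",
]


def retrieve(question: str) -> str:
    """Naive retrieval: return the document sharing the most words with the question."""
    words = set(question.lower().split())
    return max(DOCS, key=lambda d: len(words & set(d.lower().rstrip(".").split())))


def answer(question: str, context: str) -> str:
    """Stand-in for an LLM call that would answer from the retrieved context."""
    return f"Based on the context: {context}"


qa_steps = [Step("question -> context", retrieve), Step("question, context -> answer", answer)]
qa_result = run_pipeline(qa_steps, question="What is metformin used for?")
```

Because each step only declares what it consumes and produces, the wording of the underlying prompt can change, or be optimized, without touching the pipeline definition.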

By training the prompt parameters using techniques like gradient descent, developers can also optimize the prompts for specific healthcare AI tasks and improve model performance.

- Different optimizers are used to improve different parts of the prompt.

- Using a labeled training or validation set, an optimizer might:

  - Choose the best examples to maximize the evaluation metric.

  - Rewrite the prompt using an LLM.

    - Rewritten prompts that increase the evaluation metric are iterated on further.

- The optimizer can also attempt to bootstrap unlabeled examples, having the LLM generate its own labels.

- Prompt rewriting and label bootstrapping are often done with a larger teacher model.
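The "choose the best examples" strategy can be illustrated with an exhaustive search over candidate demonstrations, scored by exact match on a small labeled set. Everything here is a toy assumption: the `toy_program` stands in for an LLM by copying the label of the most similar demonstration, the sentences and labels are invented, and real optimizers use smarter search than trying every combination.

```python
from itertools import combinations
from typing import Callable, List, Sequence, Tuple

Example = Tuple[str, str]  # (input text, gold label)


def exact_match(prediction: str, gold: str) -> float:
    return float(prediction.strip().lower() == gold.strip().lower())


def toy_program(demos: Sequence[Example], text: str) -> str:
    """Stand-in for an LLM: copy the label of the demo sharing the most words."""
    words = set(text.lower().split())
    best = max(demos, key=lambda d: len(words & set(d[0].lower().split())))
    return best[1]


def evaluate(program: Callable, demos: Sequence[Example], dataset: Sequence[Example]) -> float:
    """Average metric of the program over a labeled dataset."""
    return sum(exact_match(program(demos, x), y) for x, y in dataset) / len(dataset)


def select_demos(
    program: Callable, candidates: Sequence[Example], dataset: Sequence[Example], k: int
) -> Tuple[List[Example], float]:
    """Try every k-sized set of demonstrations and keep the highest-scoring one."""
    best_demos: List[Example] = []
    best_score = -1.0
    for combo in combinations(candidates, k):
        score = evaluate(program, combo, dataset)
        if score > best_score:  # strict '>' keeps the first of any tied sets
            best_demos, best_score = list(combo), score
    return best_demos, best_score


candidates = [
    ("patient developed rash after dose", "event"),
    ("patient reported nausea after dose", "event"),
    ("patient tolerated dose well", "none"),
    ("no complaints at follow-up", "none"),
]
labeled_set = [
    ("rash noted after second dose", "event"),
    ("tolerated treatment well", "none"),
]

chosen_demos, chosen_score = select_demos(toy_program, candidates, labeled_set, k=2)
```

The search keeps one "event" and one "none" demonstration because that pair lets the toy program label both held-out sentences correctly; two demonstrations with the same label cannot.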

IQVIA’s Approach

IQVIA, a global leader in healthcare analytics and technology, has been at the forefront of building cutting-edge, Healthcare-grade AI solutions. By harnessing the power of declarative prompting, IQVIA is developing a robust and efficient approach to creating AI applications that address the unique challenges and requirements of the healthcare industry.

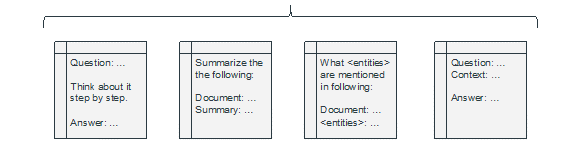

Figure 4: A framework for intelligent prompting.

- From our research and work with customers, we know:

  - The type of tasks we frequently encounter (QA, information extraction, etc.)

  - How to present these tasks to LLMs

  - Effective prompting methods for these tasks (chain-of-thought, ReAct, etc.)

- We are combining this knowledge to make an accessible and configurable set of capabilities on top of these declarative methods to streamline prompt development.

At the core of IQVIA's approach is a proprietary declarative prompting framework that expands on existing general-purpose, open-source declarative prompting libraries (e.g., Guidance, Outlines, LMQL, DSPy) with deep healthcare domain expertise and machine learning. It enables IQVIA's AI developers and clients to create highly structured, modular, and parameterized prompts that can be easily adapted and optimized for a wide range of healthcare AI tasks. Key features of IQVIA's declarative prompting approach include:

Domain-specific prompt libraries

Curated libraries of prompt templates and components specifically designed for healthcare applications. These libraries encapsulate the domain knowledge and best practices of healthcare experts, ensuring that the prompts are clinically relevant and accurate.

Example use cases:

- A prompt library for radiology that includes templates for describing imaging findings, generating differential diagnoses, and recommending follow-up tests based on specific modalities (e.g., X-ray, CT, MRI).

- A prompt library for oncology that provides templates for generating personalized treatment plans based on cancer type, stage, biomarkers, and patient preferences.

Hierarchical prompt composition

IQVIA's framework aims to support hierarchical composition of prompts, so that developers can create complex AI solutions by combining and nesting simpler prompt components. This modular approach facilitates the reuse and sharing of prompt components across different AI applications, improving efficiency and consistency.

Example use cases:

- A clinical decision support system for managing diabetes that combines prompts for patient demographics, medical history, lab results (e.g., HbA1c, blood glucose), and medication adherence to generate comprehensive treatment recommendations.

- A patient triage system that integrates prompts for symptoms, vital signs, and risk factors to determine the appropriate level of care (e.g., self-care, primary care, emergency department) and provide guidance to patients.
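To make the idea of nesting prompt components concrete, here is a generic sketch. It does not represent IQVIA's proprietary framework; the `Section` and `Composite` classes, the field names, and the patient values are hypothetical.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Union


@dataclass
class Section:
    """A leaf prompt component: a title plus a template filled from patient data."""
    title: str
    template: str

    def render(self, data: Dict[str, Any]) -> str:
        return f"{self.title}: {self.template.format(**data)}"


@dataclass
class Composite:
    """A prompt component built from other components, which may themselves be composites."""
    title: str
    children: List[Union["Section", "Composite"]]

    def render(self, data: Dict[str, Any]) -> str:
        body = "\n".join(child.render(data) for child in self.children)
        return f"{self.title}\n{body}"


# Reusable leaf components.
demographics = Section("Demographics", "Age: {age}")
labs = Section("Lab results", "HbA1c: {hba1c}%")
task = Section("Task", "Summarize the information above.")

# Components nest: the patient summary is reused inside a larger prompt.
patient_summary = Composite("Patient summary", [demographics, labs])
diabetes_review = Composite("Diabetes review", [patient_summary, task])

rendered_prompt = diabetes_review.render({"age": 58, "hba1c": 8.1})
```

The same `patient_summary` component could be dropped into a different parent prompt, such as a triage prompt, without being rewritten.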

Integrated validation and re-prompting workflows

IQVIA's framework includes built-in validation mechanisms to assess the quality and accuracy of the generated outputs against domain-specific criteria. Automated re-prompting workflows enable the iterative refinement of prompts based on validation results, ensuring that the final output meets the required standards of Healthcare-grade AI.

Example use cases:

- A workflow for validating the accuracy of generated medical codes against established coding guidelines (e.g., ICD-10, CPT) and re-prompting the model with additional context when errors are detected.

- A workflow for assessing the clinical appropriateness of generated treatment recommendations by comparing them against evidence-based guidelines and expert consensus, with re-prompting triggered when recommendations deviate from established standards.

Prompt optimization using machine learning

IQVIA leverages advanced machine learning techniques to optimize the prompt parameters for specific healthcare AI tasks. By treating prompts as learnable parameters and using techniques like gradient descent, our framework aims to enable automatic fine-tuning of prompts to improve the performance and efficiency of the resulting AI solutions.

Example use cases:

- Optimizing prompts for a clinical trial matching system to improve the precision and recall of patient-trial matches, reducing the manual effort required for eligibility screening.

- Fine-tuning prompts for a pharmacovigilance system to increase the sensitivity and specificity of adverse event detection from unstructured data sources, enabling earlier identification of potential safety signals.

We hope you’ve enjoyed this discussion, and would welcome your thoughts and comments. Contact us if you’d like more information.

References

Chain-of-Thought Prompting Elicits Reasoning in Large Language Models

Efficient Guided Generation for Large Language Models

LMQL: A programming language for large language models

Guidance: A guidance language for controlling large language models

DSPy: Compiling Declarative Language Model Calls into Self-Improving Pipelines