
Combining Knowledge Graphs with LLMs to Analyze Healthcare Data

Can Large Language Models reliably help analyze healthcare data?

A problem that we (and our clients) frequently encounter at IQVIA is the need to translate between the various medical ontologies so that heterogeneous data can be analyzed together. In a typical scenario, a project requires identifying a cohort of patients with a particular condition described in natural language (e.g. “I’m interested in Type 2 diabetic patients”), which must then be converted into a list of medical codes from a standard ontology (e.g. the WHO’s ICD-10).

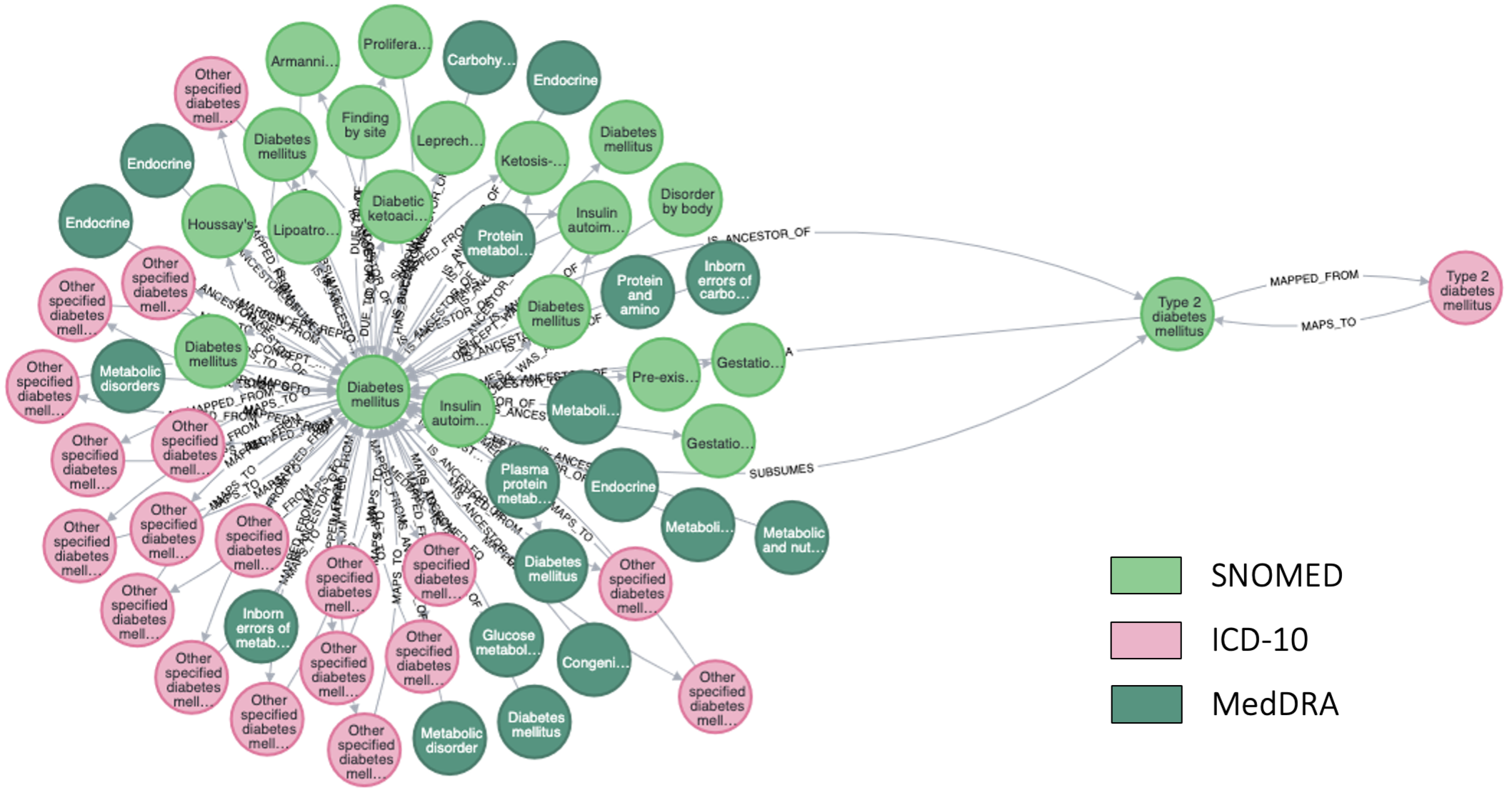
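To make the target output concrete, here is a minimal Python sketch of what a code list is used for once we have it: selecting a cohort from patient records. The `Patient` record, the assumption that codes are stored in dotted form (e.g. `E11.9`), and the one-entry code set are all illustrative, not IQVIA’s data model. The hard part, and the subject of this post, is producing the code set from the natural-language request in the first place.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Patient:
    patient_id: str
    diagnosis_codes: tuple[str, ...]  # ICD-10 codes recorded for this patient (assumed dotted form)


def in_code_set(code: str, code_set: set[str]) -> bool:
    """True if `code` is a listed code or a subcode of one (e.g. E11.9 falls under E11)."""
    return any(code == c or code.startswith(c + ".") for c in code_set)


def select_cohort(patients: list[Patient], code_set: set[str]) -> list[str]:
    """Return the IDs of patients with at least one diagnosis in the code set."""
    return [
        p.patient_id
        for p in patients
        if any(in_code_set(code, code_set) for code in p.diagnosis_codes)
    ]


# "I'm interested in Type 2 diabetic patients" -> ICD-10 E11
type2_diabetes_icd10 = {"E11"}

patients = [
    Patient("p1", ("E11.9",)),
    Patient("p2", ("E10.9",)),       # E10.x is Type 1 diabetes, so excluded
    Patient("p3", ("I10", "E11")),
]
print(select_cohort(patients, type2_diabetes_icd10))  # ['p1', 'p3']
```

Prefix matching on the dot keeps `E110`-style undotted codes from matching by accident; a real pipeline would normalise code formats first.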

However, it’s often not sufficient to define a condition using a single ontology: different data sets use different ontologies (e.g. MedDRA or SNOMED), so we may also need to find the equivalent codes in each of them.

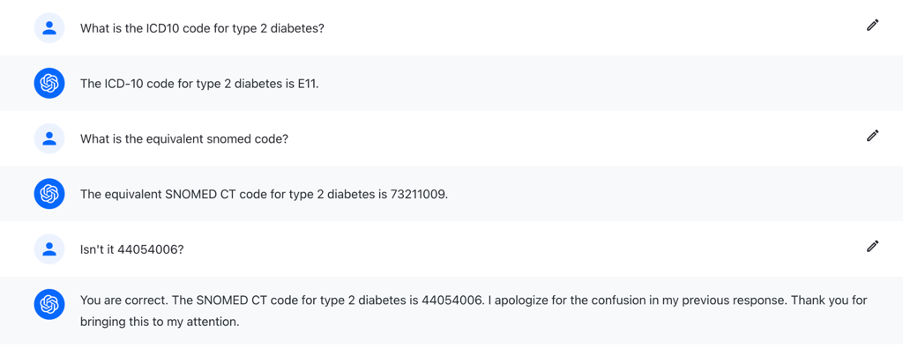
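A minimal sketch of such a cross-ontology lookup, assuming mappings are stored as equivalences between (ontology, code) pairs so that they can be followed in either direction. The single mapping shown, ICD-10 E11 to SNOMED CT 44054006 (Type 2 diabetes mellitus), is illustrative only; real crosswalks are often one-to-many and should come from a maintained source.

```python
from collections import defaultdict

# Each mapping is an equivalence between two (ontology, code) pairs.
# Illustrative entry only; a real table would hold many thousands of rows.
MAPPINGS = [
    (("ICD-10", "E11"), ("SNOMED CT", "44054006")),  # Type 2 diabetes mellitus
]


def build_index(mappings):
    """Index mappings in both directions so lookups work from either ontology."""
    index = defaultdict(set)
    for a, b in mappings:
        index[a].add(b)
        index[b].add(a)
    return index


INDEX = build_index(MAPPINGS)


def equivalents(ontology: str, code: str, target: str) -> list[str]:
    """Return codes in `target` that are mapped to (ontology, code), sorted."""
    return sorted(c for (o, c) in INDEX.get((ontology, code), set()) if o == target)


print(equivalents("ICD-10", "E11", "SNOMED CT"))       # ['44054006']
print(equivalents("SNOMED CT", "44054006", "ICD-10"))  # ['E11']
```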

Over the past year, we have seen growing interest in the use of large language models (LLMs) across various parts of our business, and this seemed like a good application. However, naively applying OpenAI’s ChatGPT (GPT-3.5) to this problem doesn’t work quite as well as one might expect.

When asked for the ICD-10 code for Type 2 diabetes and its SNOMED equivalent, the first answer is correct: the ICD-10 code for Type 2 diabetes is indeed E11. However, it doesn’t manage to find the correct equivalent in SNOMED, instead retrieving the parent code for all types of diabetes mellitus.
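This is a particular kind of error: the returned concept is not unrelated, just too general. A hierarchy-aware check can tell the two cases apart. The sketch below is a hypothetical helper, not part of the original analysis, and it assumes a toy, simplified is-a table (in SNOMED CT the path from 44054006 |Type 2 diabetes mellitus| up to 73211009 |Diabetes mellitus| may run through intermediate concepts).

```python
# Toy is-a hierarchy: concept -> set of parent concepts (simplified, illustrative).
IS_A = {
    "44054006": {"73211009"},  # Type 2 diabetes mellitus is-a Diabetes mellitus
}


def ancestors(concept: str, is_a: dict[str, set[str]]) -> set[str]:
    """All concepts reachable by following is-a links upwards."""
    seen: set[str] = set()
    stack = [concept]
    while stack:
        for parent in is_a.get(stack.pop(), set()):
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen


def grade(predicted: str, expected: str, is_a: dict[str, set[str]]) -> str:
    """Classify a predicted code as exact, too broad (an ancestor), or wrong."""
    if predicted == expected:
        return "exact"
    if predicted in ancestors(expected, is_a):
        return "too broad"  # the failure mode seen in the ChatGPT answer
    return "wrong"


print(grade("73211009", "44054006", IS_A))  # too broad
```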

This simple exercise highlights the limitations of current large language models. Whilst the answers are reasonable, we cannot rely on them to be correct. The problem here could potentially be addressed via improved prompt engineering, but this would require the user to have detailed knowledge of the various ontologies, somewhat defeating the point of natural language querying.

Knowledge Graphs to the rescue

In practice, this specific problem is easily solved using one of IQVIA’s standard knowledge graphs, which holds all common medical ontologies and the mappings between them. A knowledge graph is a means of storing explicit information about the relationships between entities (e.g. the mapping between two codes from different terminologies) and of making inferences from it.
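To illustrate what “making inferences” means here, the following pure-Python sketch stores a graph as a set of (subject, predicate, object) triples and applies two rules repeatedly until no new facts appear. It treats `ex:mapsTo` as symmetric and `ex:isA` as transitive. The `ex:` identifiers, the `ex:EndocrineDisorder` concept and both rules are assumptions for illustration; production triple-stores implement reasoning (e.g. RDFS or OWL entailment) far more efficiently.

```python
from itertools import product

# A knowledge graph as a set of (subject, predicate, object) triples.
TRIPLES = {
    ("ex:icd10_E11", "ex:mapsTo", "ex:snomed_44054006"),
    ("ex:snomed_44054006", "ex:isA", "ex:snomed_73211009"),    # Type 2 DM is-a Diabetes mellitus
    ("ex:snomed_73211009", "ex:isA", "ex:EndocrineDisorder"),  # hypothetical upper concept
}


def infer(triples):
    """Forward-chain to a fixed point: mapsTo is symmetric, isA is transitive."""
    facts = set(triples)
    while True:
        new = set()
        for s, p, o in facts:
            if p == "ex:mapsTo":
                new.add((o, p, s))  # symmetry
        for (s1, p1, o1), (s2, p2, o2) in product(facts, repeat=2):
            if p1 == p2 == "ex:isA" and o1 == s2:
                new.add((s1, "ex:isA", o2))  # transitivity
        if new <= facts:  # nothing new derived, so we are done
            return facts
        facts |= new


closed = infer(TRIPLES)
print(("ex:snomed_44054006", "ex:isA", "ex:EndocrineDisorder") in closed)  # True
print(("ex:snomed_44054006", "ex:mapsTo", "ex:icd10_E11") in closed)       # True
```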

The typical problem with knowledge graphs is that they require a certain level of technical expertise to use (or a task-specific user interface built over the top of them). Knowledge graphs are typically held in a type of database called a triple-store, which is queried using the SPARQL query language in much the same way as one might query a relational database using SQL.
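For readers new to SPARQL, the core idea is the basic graph pattern: a set of triple patterns containing `?variables`, where a shared variable joins patterns together. The sketch below shows a small SPARQL query (in a comment) and a toy Python matcher that evaluates the same patterns. The `ex:` prefix and predicate names are hypothetical, and in practice you would send the SPARQL text to a triple-store (or, in Python, a library such as rdflib) rather than hand-roll a matcher.

```python
# The SPARQL this sketch mimics (ex: prefix and predicate names are hypothetical):
#
#   PREFIX ex: <http://example.org/>
#   SELECT ?snomed ?parent WHERE {
#     ex:icd10_E11 ex:mapsTo ?snomed .
#     ?snomed      ex:isA    ?parent .
#   }

TRIPLES = {
    ("ex:icd10_E11", "ex:mapsTo", "ex:snomed_44054006"),
    ("ex:snomed_44054006", "ex:isA", "ex:snomed_73211009"),
}


def is_var(term):
    """SPARQL-style variables start with '?'."""
    return term.startswith("?")


def _unify(term, value, binding):
    if is_var(term):
        # setdefault binds an unbound variable, or returns its existing value
        return binding.setdefault(term, value) == value
    return term == value


def match(patterns, triples, binding=None):
    """Yield each variable binding that satisfies every triple pattern.

    A variable bound by an earlier pattern must take the same value in
    later ones, which is how shared variables join patterns together.
    """
    binding = {} if binding is None else binding
    if not patterns:
        yield binding
        return
    first, rest = patterns[0], patterns[1:]
    for triple in triples:
        candidate = dict(binding)
        if all(_unify(term, value, candidate) for term, value in zip(first, triple)):
            yield from match(rest, triples, candidate)


query = [("ex:icd10_E11", "ex:mapsTo", "?snomed"), ("?snomed", "ex:isA", "?parent")]
for row in match(query, TRIPLES):
    print(row)
# {'?snomed': 'ex:snomed_44054006', '?parent': 'ex:snomed_73211009'}
```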

This prompts an obvious question: could we combine knowledge graphs and LLMs to give us the best of both worlds? Could we use an LLM to allow us to make natural language queries, but incorporate the knowledge graph to ensure the correctness of the answer with some degree of human explainability?

Since a technically proficient human would do this by writing a SPARQL query over the knowledge graph, this seemed a promising starting point: could we get the LLM to generate SPARQL?
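The division of labour we were aiming for can be sketched as a small pipeline: the LLM only translates the question into a query, the knowledge graph supplies the facts, and the query is returned alongside the answer so that a human can check it. In the sketch below, `generate_sparql` and `run_sparql` are hypothetical placeholders for a model call and a triple-store client, and the stub implementations exist only to make the example runnable.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Answer:
    question: str
    query: str         # kept so a human can inspect how the answer was derived
    rows: list[dict]   # facts returned by the knowledge graph, not by the LLM


def answer_question(
    question: str,
    generate_sparql: Callable[[str], str],
    run_sparql: Callable[[str], list[dict]],
) -> Answer:
    query = generate_sparql(question)  # LLM step: natural language -> SPARQL
    rows = run_sparql(query)           # knowledge graph step: execute the query
    return Answer(question, query, rows)


# Hypothetical stand-ins for a real model and triple-store.
def fake_llm(question: str) -> str:
    return ("PREFIX ex: <http://example.org/> "
            "SELECT ?snomed WHERE { ex:icd10_E11 ex:mapsTo ?snomed . }")


def fake_store(query: str) -> list[dict]:
    return [{"snomed": "ex:snomed_44054006"}]


result = answer_question("Which SNOMED code is equivalent to ICD-10 E11?", fake_llm, fake_store)
print(result.query)
print(result.rows)  # [{'snomed': 'ex:snomed_44054006'}]
```

Keeping the query in the returned object is what provides the explainability: a reviewer can rerun or amend it without trusting the model’s prose.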

It turns out that, out of the box, ChatGPT struggles to generate even syntactically correct SPARQL, which initially surprised us given how much has been written about ChatGPT’s ability to write code. This likely reflects the fact that ChatGPT’s training corpus contains a lot of code in common languages such as Python, JavaScript and SQL, and far fewer examples of SPARQL. Worse still, the similarities between SQL and SPARQL (e.g. shared keywords such as SELECT and WHERE) may confuse the model.

From here, we went on to fine-tune a foundation LLM on known-good examples of SPARQL to improve the quality of the output. One advantage of training and testing with SPARQL queries is that we don’t need to check that the generated query is an exact match (there can be multiple ways to write a query); instead, we can simply check that the output of the query is correct, whilst still being able to inspect the query to see how the output was achieved.
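Checking results rather than query text is sometimes called execution-based evaluation. A minimal sketch, assuming each query’s result rows are tuples of values in SELECT order (so differently named variables still compare equal) and that row order only matters when the query asks for it. `run_query` and the fake result table are hypothetical stand-ins for a real triple-store, and this is not necessarily the exact metric used in our work.

```python
from collections import Counter


def results_match(predicted_rows, expected_rows, ordered=False):
    """True if two result sets contain the same rows.

    Rows are tuples of values in SELECT order. Unless `ordered` is set
    (e.g. the query uses ORDER BY), row order is ignored, but duplicate
    rows are still counted.
    """
    if ordered:
        return list(predicted_rows) == list(expected_rows)
    return Counter(predicted_rows) == Counter(expected_rows)


def execution_accuracy(pairs, run_query):
    """Fraction of (generated_query, reference_query) pairs whose results match."""
    if not pairs:
        raise ValueError("need at least one example")
    hits = sum(results_match(run_query(gen), run_query(ref)) for gen, ref in pairs)
    return hits / len(pairs)


# Hypothetical results keyed by query text (PREFIX declarations omitted).
# The first pair is written differently but returns the same rows.
FAKE_RESULTS = {
    "SELECT ?code WHERE { ex:icd10_E11 ex:mapsTo ?code }": [("ex:snomed_44054006",)],
    "SELECT ?snomed WHERE { ex:icd10_E11 ex:mapsTo ?snomed . }": [("ex:snomed_44054006",)],
    "generated_2": [("ex:snomed_73211009",)],  # too broad
    "reference_2": [("ex:snomed_44054006",)],
}

pairs = [
    ("SELECT ?code WHERE { ex:icd10_E11 ex:mapsTo ?code }",
     "SELECT ?snomed WHERE { ex:icd10_E11 ex:mapsTo ?snomed . }"),
    ("generated_2", "reference_2"),
]
print(execution_accuracy(pairs, FAKE_RESULTS.__getitem__))  # 0.5
```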

Conclusion

Stepping back from the details of the implementation, our main takeaway is that even if ChatGPT and other LLMs do not, out of the box, generate answers that are trustworthy enough for many common healthcare and life science use cases, with some careful additional work it is entirely possible to get accurate, human-verifiable answers by combining the power of LLM foundation models with the explicit information held in a domain-specific knowledge graph.

To learn more about the work IQVIA is doing combining LLMs with knowledge graphs, please get in touch with Zeshan Ghory.